How to increase detection accuracy and improve successful recognition

Contemporary video surveillance software (VSS for short) usually offers various detectors that analyze the camera's video feed in real time. Because the objects or actions being sought have different distinguishing traits, the criteria for finding them differ too. The internal mechanisms of modules doing different tasks can also be completely dissimilar, so similar-looking modules might be scanning the camera video stream for different things. For example, object recognition tools look for objects that look and act like the patterns in their ‘knowledge’, while a motion detector simply checks whether image pixels change.

Detection accuracy in VSS depends on whether the module in question has the right conditions to do its job. The ideal conditions thus depend on the type of job: for the object recognizer mentioned above, it is seeing the object clearly from the side; for a motion detector, it is the absence of ‘excessive’ motion in the frame that overshadows the ‘real’ motion.
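To illustrate why ‘excessive’ motion is a problem for a pixel-change detector, here is a minimal frame-differencing sketch. It is not Xeoma's implementation; the function names, the thresholds and the tiny synthetic frames are assumptions made for illustration only.

```python
def changed_fraction(prev_frame, curr_frame, pixel_threshold=25):
    """Share of pixels whose brightness changed by more than pixel_threshold.

    Frames are lists of rows of grayscale values (0-255); this layout is an
    assumption for the sketch, not a real VSS data format.
    """
    total = 0
    changed = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        for prev_px, curr_px in zip(prev_row, curr_row):
            total += 1
            if abs(curr_px - prev_px) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def motion_detected(prev_frame, curr_frame, pixel_threshold=25, area_threshold=0.02):
    """Report motion when enough of the frame has changed (hypothetical thresholds)."""
    return changed_fraction(prev_frame, curr_frame, pixel_threshold) >= area_threshold


# A dark, static 10x10 scene.
background = [[10] * 10 for _ in range(10)]

# 'Real' motion: a small bright 2x2 object appears in the scene.
intruder = [row[:] for row in background]
for y in range(4, 6):
    for x in range(4, 6):
        intruder[y][x] = 200

# 'Excessive' motion: headlights brighten the whole frame at once.
headlights = [[90] * 10 for _ in range(10)]

if __name__ == "__main__":
    print(motion_detected(background, background))  # False: nothing changed
    print(motion_detected(background, intruder))    # True: the small object
    print(motion_detected(background, headlights))  # True: the light sweep
```

Both the intruder and the headlights trigger the detector, and the headlights change far more pixels, which is why keeping unrelated motion out of the monitored zone matters more than any threshold tuning.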

So how do you improve detection accuracy and the successful recognition rate in VSS? Most of the advice on improving detection accuracy addresses circumstances that stand in the way of better performance. This advice can generally be divided into 3 categories:

If you want to increase detection accuracy and the successful recognition rate, the first thing to look at is probably the camera position.

Most neural-network-powered modules use their knowledge base (or ‘training’) as a reference for what a given object or action looks like. The module scans the video stream for objects at intervals and compares them to the patterns that, according to its training, the sought-for objects should match. The training usually reflects conditions in which the desired objects are easiest for a computer to distinguish from the surrounding scenery. More often than not, this translates into camera position requirements: a position that matches the training data of such smart modules aids better performance.

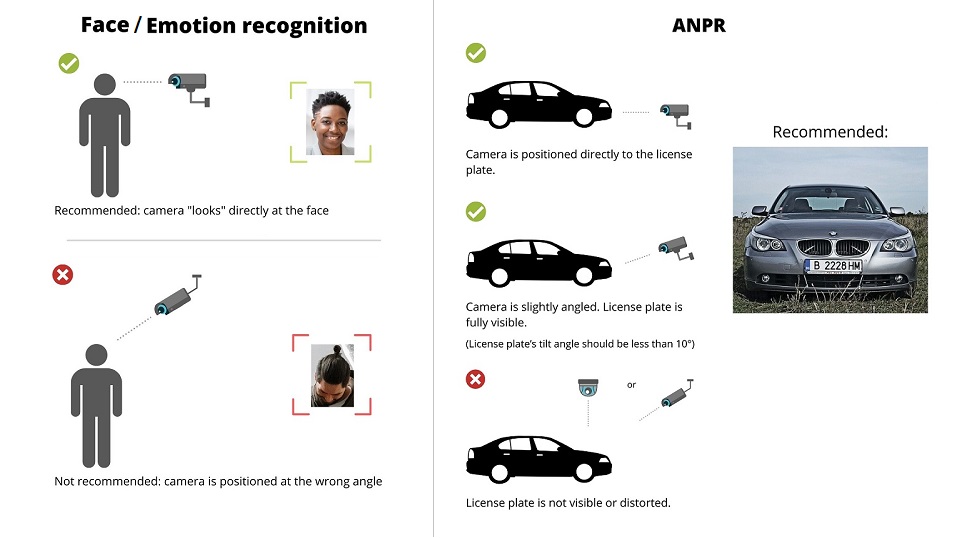

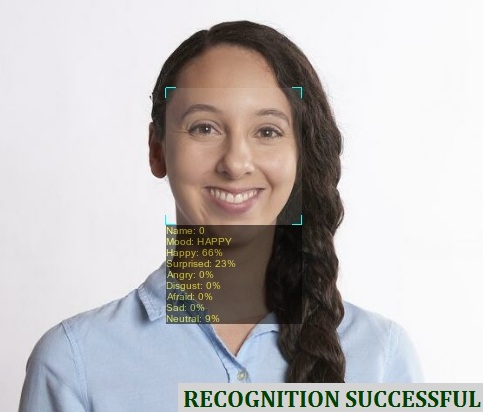

For example, the best position for higher accuracy of Face / Emotions / Facial Mask recognition faces the person as straight on as possible. Advanced VSS can still recognize people whose heads are turned at some reasonable angle; however, if the goal is a higher recognition rate, position the camera as close to the recommended position as possible. Similarly, ANPR utilities in VSS perform best when cameras face license plates at an angle as close to 0° as possible.

For Crowd Detector, passenger counting and queue analysis, however, the best camera mounting is overhead, with a clear view of people's heads. For Sports Tracking or Object Recognition, a side view usually brings the best results. The Motion Detector, regardless of camera angle, shows its best results when there are no redundant motion events in the monitored zone (for example, no car headlights where you expect home intruders to arrive from).

So the conclusion is this: it is always best to consult the module's guide on how to use it for the best detection accuracy.

Various features have various requirements as to how to properly position a camera

It is only logical that the PTZ Sports Tracking module cannot work with a camera that has no PTZ functions. Face Recognition will not recognize people at night if the camera can't make out anything in the dark, and a warped Fisheye video feed might distort objects to the point that they no longer match the AI's sample patterns. What these examples have in common is that some camera settings or properties can directly affect the successful recognition rate and detection accuracy.

What to do: to increase detection accuracy, address the camera's shortcomings, for example by adding more lights or turning on the IR night mode for better vision at night, or by properly dewarping the Fisheye camera feed (for example, with Xeoma's Fisheye Dewarping module).

Distortion of objects on edges in Fisheye cameras might have a negative effect on recognition and detection accuracy

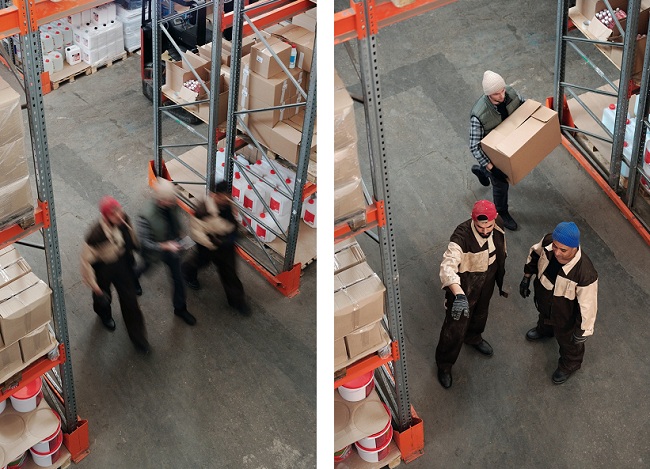

Motion blur is the apparent streaking of moving objects in a sequence of frames, such as in a video camera feed. It is a typical cause of detection accuracy issues, so reducing it is critical. If still shots from your camera stream show moving objects blurred mid-motion, or ‘ghosted’, it will probably have a negative effect on the successful recognition rate. Computer vision usually requires a relatively sharp image for real-time analysis, where each frame counts.
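One way to check still shots for blur without judging by eye is a sharpness score. The sketch below uses the variance of the Laplacian, a common focus heuristic; it is offered as an illustration under stated assumptions, not as the method any particular VSS uses. The function names and the synthetic test frame are hypothetical, and the horizontal box blur is only a crude stand-in for real motion blur.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over interior pixels.

    `gray` is a list of rows of grayscale values. Sharp edges give strong
    Laplacian responses and a high variance; blurred edges give a low one.
    """
    height = len(gray)
    width = len(gray[0]) if height else 0
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    if not responses:
        return 0.0
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def box_blur_horizontal(gray, radius=2):
    """Average each pixel with its row neighbours (simulated horizontal smear)."""
    blurred = []
    for row in gray:
        new_row = []
        for x in range(len(row)):
            lo = max(0, x - radius)
            hi = min(len(row), x + radius + 1)
            window = row[lo:hi]
            new_row.append(sum(window) / len(window))
        blurred.append(new_row)
    return blurred


# Synthetic 16x16 frame with one crisp vertical edge (dark left, bright right).
sharp = [[0] * 8 + [255] * 8 for _ in range(16)]
smeared = box_blur_horizontal(sharp)

if __name__ == "__main__":
    print("sharp score:  ", laplacian_variance(sharp))
    print("smeared score:", laplacian_variance(smeared))
```

The smeared frame scores far lower than the sharp one. The absolute numbers depend on scene content, so a score like this is best used to compare frames from the same camera before and after a settings change rather than against a fixed threshold.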

Where artificial intelligence modules are concerned, motion blur is usually caused by 2 things:

1) insufficient shutter speed on the camera

2) insufficient bitrate set for the stream

Shutter speed should match how fast the objects are moving: the faster the object, the faster the shutter speed needed to ‘capture’ it. The faster the shutter, the less motion blur. Thus one shutter speed could be sufficient to capture strolling people but insufficient to recognize the plates of moving cars or a sports ball rocketing across the field.
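The relation between object speed and shutter speed can be made concrete with a rough estimate: during one exposure, an object smears across roughly its speed times the exposure time. The sketch below assumes motion parallel to the image plane and a known scene scale in pixels per meter; the function names and all the numbers (walking speed, car speed, scale, blur budget) are illustrative assumptions, not recommendations from any camera vendor.

```python
def blur_length_px(speed_m_per_s, pixels_per_meter, exposure_s):
    """Approximate smear, in pixels, of an object during one exposure."""
    return speed_m_per_s * pixels_per_meter * exposure_s


def max_exposure_s(speed_m_per_s, pixels_per_meter, max_blur_px):
    """Longest exposure that keeps the smear within max_blur_px."""
    return max_blur_px / (speed_m_per_s * pixels_per_meter)


# Hypothetical scene: 50 pixels per meter at the object's distance,
# camera shutter set to 1/50 s.
SCALE_PX_PER_M = 50
EXPOSURE_S = 1 / 50

pedestrian_blur = blur_length_px(1.4, SCALE_PX_PER_M, EXPOSURE_S)  # walking pace
car_blur = blur_length_px(14.0, SCALE_PX_PER_M, EXPOSURE_S)        # about 50 km/h

# Exposure needed to keep the car's smear within an assumed 2-pixel budget.
car_exposure = max_exposure_s(14.0, SCALE_PX_PER_M, max_blur_px=2)

if __name__ == "__main__":
    print(f"pedestrian smear: {pedestrian_blur:.1f} px")
    print(f"car smear:        {car_blur:.1f} px")
    print(f"car needs about 1/{round(1 / car_exposure)} s or faster")
```

With these assumed numbers the same 1/50 s shutter smears a pedestrian by about 1.4 pixels but a car by about 14 pixels, which is the difference between a readable and an unreadable license plate.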

Motion blur is a serious hindrance to detection accuracy

Here are some other contributing settings that might help fight the motion blur effect:

An insufficient video stream bitrate might have a negative effect on recognition accuracy because it is bound to cause image distortions. Besides, an inadequately low bitrate cannot carry all the details of the scenery, which might be critical for recognition done by machine vision.

The general formula for adequate stream bitrate is

Camera bitrate in bits per second / (image width in pixels × image height in pixels × stream fps)

The resulting value should lie in the 0.1-0.15 range. If it does, the camera bitrate is considered adequate in most cases and should not result in motion blur. Note, however, that what counts as adequate may vary with the scenery content, the abundance of colors and the amount of motion in the frame.
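The bitrate check can be sketched in a few lines. The function names and the example stream settings (1920×1080 at 25 fps) are assumptions for illustration; only the formula and the 0.1-0.15 range come from the text above.

```python
def bits_per_pixel(bitrate_bps, width, height, fps):
    """Bitrate divided by the number of pixels transmitted per second."""
    return bitrate_bps / (width * height * fps)


def classify_bitrate(bitrate_bps, width, height, fps, low=0.1, high=0.15):
    """Label a stream relative to the 0.1-0.15 range given in the article."""
    value = bits_per_pixel(bitrate_bps, width, height, fps)
    if value < low:
        return "too low"
    if value > high:
        return "above range"
    return "in range"


def recommended_bitrate_range(width, height, fps, low=0.1, high=0.15):
    """Bitrates (in bits per second) that map to the low and high ends of the range."""
    pixels_per_second = width * height * fps
    return low * pixels_per_second, high * pixels_per_second


if __name__ == "__main__":
    # Hypothetical Full HD stream at 25 fps.
    width, height, fps = 1920, 1080, 25
    for bitrate in (2_000_000, 6_000_000):
        ratio = bits_per_pixel(bitrate, width, height, fps)
        label = classify_bitrate(bitrate, width, height, fps)
        print(f"{bitrate / 1e6:.0f} Mbit/s -> {ratio:.3f} ({label})")
    lo, hi = recommended_bitrate_range(width, height, fps)
    print(f"suggested: {lo / 1e6:.1f}-{hi / 1e6:.1f} Mbit/s")
```

For this assumed stream, 2 Mbit/s falls well below the range while 6 Mbit/s sits inside it, and the range itself works out to roughly 5.2-7.8 Mbit/s.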

Reducing the blur of moving objects can have a dramatic effect on detection and recognition accuracy.

One camera that worked for one task might not be ideal for another, so if nothing else helps, consider replacing the camera with a model that has a faster shutter speed, better image quality, or more features that support better detection accuracy in your case. Xeoma video surveillance software applies multifaceted optimization to its artificial intelligence modules, which are usually considered quite CPU-heavy, so hardware overload is rarely the cause. However, for processes like fire recognition, FaceID, etc., where latency is critical, server equipment that can keep up with computations on the fly is advised.

Xeoma VSS is known for its flexibility: one task can typically be accomplished in several ways. You can ask our tech support and customer care whether there is another way to accomplish your task that might work better for you. Moreover, we can do additional training of artificial intelligence modules so that they yield the desired recognition rate in your particular environment (for example, if the safety helmets on your site have an unusual color).

With great power comes great responsibility. Video surveillance software like Xeoma has dozens of out-of-this-world next-gen machine vision possibilities, but more often than not they need proper conditions for best performance. Follow the recommendations for the best camera position and properties in your VSS, make sure that the camera image is crisp and clear, and you may never have to ask how to increase detection/recognition accuracy again.

About Xeoma:

Xeoma is the video surveillance software for all tasks related to camera vision, from video security to automation of business operations, from smart homes to customer analysis.

Xeoma's strengths are a simple interface with a modular structure, flexible turnkey settings, affordable prices, quality service and professional functions ranging from recognition of license plates, faces, emotions, gender and age, object and sound types, abandoned or missing items, to loop recording, parking and safe city solutions.

Xeoma offers a completely free version without ads, a trial version for testing, and commercial versions for any budget.

It is equally suited to private use and to large enterprise-level systems. See more and get a free trial on the Xeoma page.

June 9, 2022

Read also:

Additional modules in Xeoma

Xeoma Full User Manual

Reducing CPU load: Full guide