Emotion Recognition

Humans express emotions through a variety of means: gestures, body language, tone of voice, etc. The predominant one, however, is facial expression: a furrowed brow, a wrinkled nose, a wide smile. These can tell us plenty about their owner’s state of mind and constitute one of the pillars of non-verbal communication. Each of us learns to recognize such patterns (basically, intuitive behavior analysis) from the first time we open our eyes, and eventually it becomes second nature.

Now that we know that AI (specifically, neural networks) is capable of learning too, the inevitable question must be asked: “Can it learn to interpret human emotions?” Moreover, can it learn to do so faster than we do? The answer is, undoubtedly, yes, but do take it with a grain of salt: as mentioned before, facial expressions are not the only way to show emotions, so analyzing only the face cannot give 100% accurate results.

As of version 18.11.21, Xeoma includes the Face Detector (Emotions) module, which works in conjunction with Object Detector. It checks the picture for faces and attempts to gauge the overall state of mind for each of them using 7 measures:

- happy

- surprised

- angry

- disgust

- afraid

- sad

- neutral

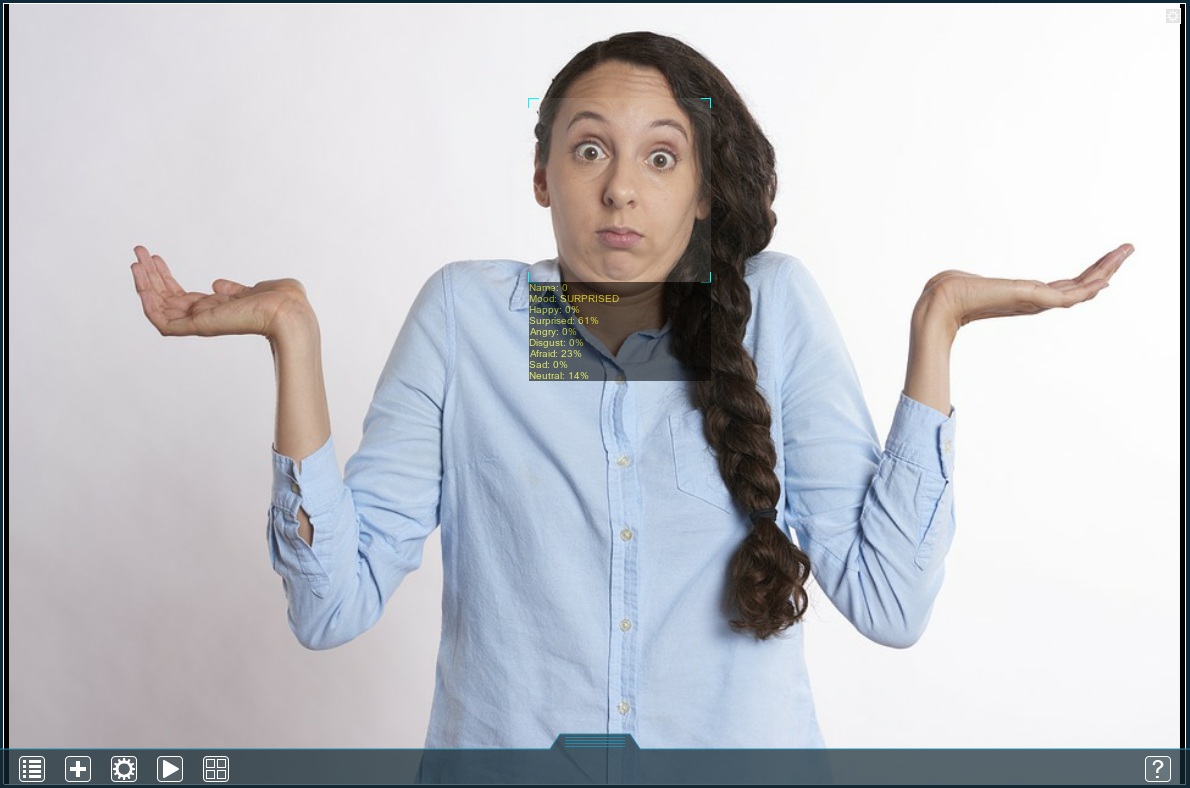

Each of these shows up on a detected face to a varying degree and is assigned a percentage (0-100) based on that degree. Whichever of the seven scores the highest percentage is accepted as the dominant state, the Mood. All values are shown on screen, so the lower percentages are visible too. Here are some examples:

The mood has been detected as Neutral; however, a hint of sadness (23%) did not escape the detector’s attention either.

The mood has been identified as Surprised. Notice that the second highest score comes from Afraid (23%). This is quite common, as the facial expressions for these 2 emotional states have plenty in common. The detector can still tell them apart, however.

You may have noticed that among the parameters emotion recognition shows under a face there is also a Name: a number assigned to each face currently in the frame (starting with 0). Here there are 2 faces, so they are numbered 0 and 1. A full face is also not always necessary to get an accurate reading. The mood is obviously Happy, no real challenge.

Here is a somewhat more complicated case:

The hair partially obscures the oval of the face, facial hair is present, and the frame includes an object close to the face that has the same color but is not part of it (a hand). All of this complicates recognition. Still, the detector’s verdict is Surprised.
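The rule for choosing the Mood described earlier (the highest of the seven percentages wins) can be sketched in a few lines of Python. This is only an illustration of the rule, not Xeoma's actual code: the function name `dominant_mood` and the sample percentages are made up, apart from the 23% for sadness quoted in the first example.

```python
# The seven measures listed in the article.
EMOTIONS = ("happy", "surprised", "angry", "disgust", "afraid", "sad", "neutral")


def dominant_mood(scores):
    """Return the emotion with the highest percentage (hypothetical helper)."""
    missing = [name for name in EMOTIONS if name not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    # max() keeps the first of several equal values, so ties resolve
    # in the order of EMOTIONS; that tie rule is an assumption.
    return max(EMOTIONS, key=lambda name: scores[name])


# Illustrative values only; just the 23% for "sad" comes from the article.
face = {"happy": 2, "surprised": 3, "angry": 4, "disgust": 1,
        "afraid": 2, "sad": 23, "neutral": 65}

print(dominant_mood(face))  # neutral
```

The lower scores stay available in `face`, which mirrors how the module keeps showing them on screen next to the Mood.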

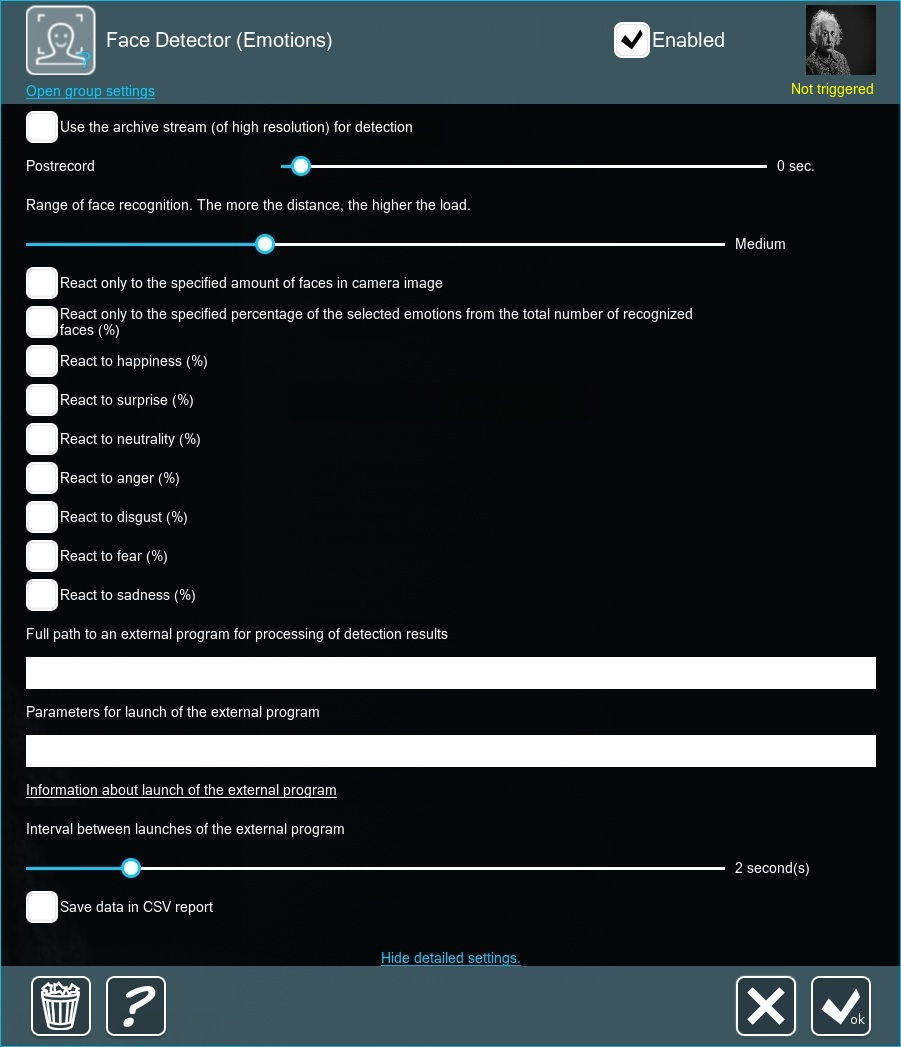

Now let’s have a closer look at the module’s settings:

Use the archive stream (of high resolution) for detection – allows the detector to analyze the archive stream instead of the preview one (here is why they should be different).

Postrecord – indicates how long the archive should keep recording after the detector stops reacting.

Range of face recognition – sets how far a face can be from the camera; basically, the smaller the face appears in the frame, the higher the slider should be set.

The React boxes are where the module’s true functionality starts. Each emotion has a separate box, but the checked boxes are evaluated together. If you are familiar with computer logic, you can view the checked boxes as connected with logical AND (conjunction). If not, here is how it works:

- If only one emotion is selected – the detector will react only to that emotion and ignore all others;

- If 2 emotions are selected – the detector will react only to faces that exhibit BOTH these emotions;

- If 2 emotions are selected – the detector will not react to a face that expresses only one of the selected emotions.

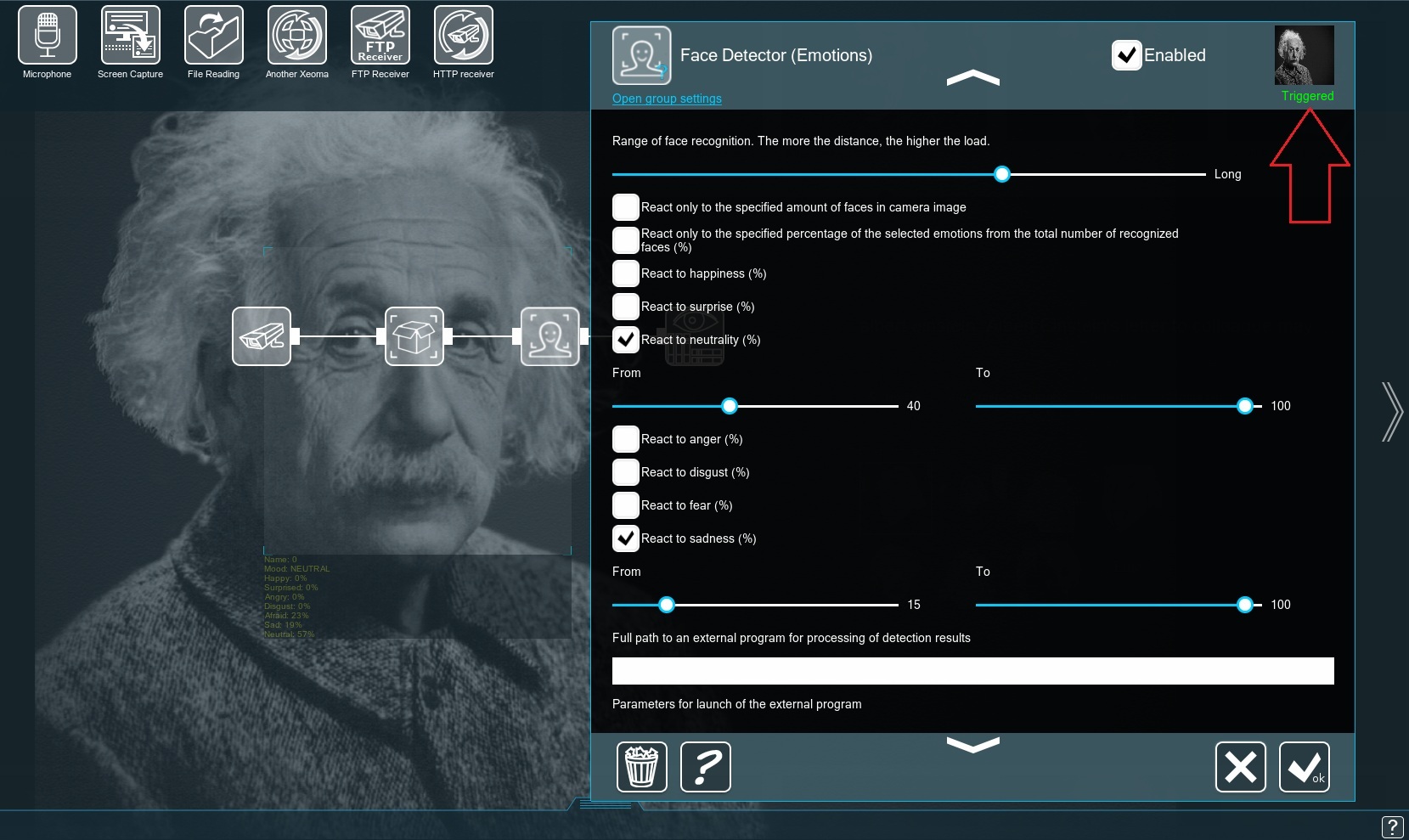

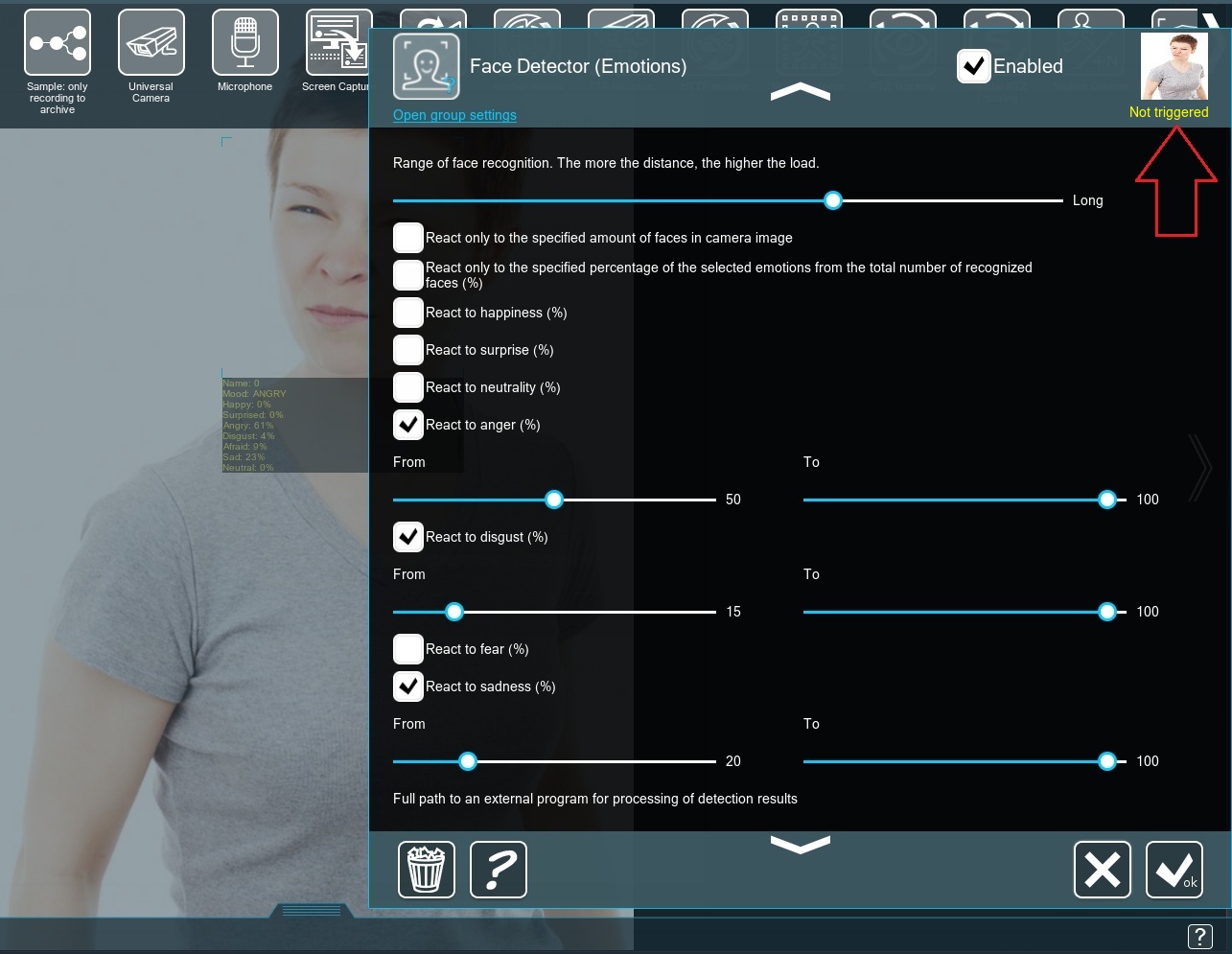

Each of these boxes, when checked, displays 2 sliders to adjust the minimum and maximum percentage to react to. This is best illustrated by examples:

Here emotion recognition checks only for neutrality and sadness. The face’s neutrality is 57% (fits between 40% and 100%); sadness is 19% (fits between 15% and 100%). Both conditions are met, so the detector is triggered.

Here the detector looks for 3 emotions: anger, disgust and sadness. The face’s anger is 61% (fits between 50% and 100%); disgust is 4% (doesn’t fit between 15% and 100%); sadness is 23% (fits between 20% and 100%) – thus the detector is not triggered, since only 2 of the 3 conditions are met (anger and sadness).
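The two examples above can be reproduced with a small Python sketch of the AND logic. The function name `face_matches` and the dictionary layout are assumptions made for illustration, as is treating the slider bounds as inclusive; only the percentages and ranges come from the examples.

```python
def face_matches(scores, rules):
    """True if every selected emotion lies inside its (min, max) range.

    scores: emotion name -> detected percentage for one face
    rules:  emotion name -> (min, max) for each checked React box
    """
    # Note: an empty rule set matches every face here; that is a property
    # of this sketch, not documented Xeoma behaviour.
    return all(low <= scores.get(emotion, 0) <= high
               for emotion, (low, high) in rules.items())


# First example: neutrality and sadness are selected.
rules_a = {"neutral": (40, 100), "sad": (15, 100)}
face_a = {"neutral": 57, "sad": 19}

# Second example: anger, disgust and sadness are selected.
rules_b = {"angry": (50, 100), "disgust": (15, 100), "sad": (20, 100)}
face_b = {"angry": 61, "disgust": 4, "sad": 23}

print(face_matches(face_a, rules_a))  # True: both conditions are met
print(face_matches(face_b, rules_b))  # False: disgust (4%) is below 15%
```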

This logic works with any combination of emotions. However, there are a couple of things that are unlikely to yield good results:

- Looking for opposite emotions at the same time – e.g. happiness and anger almost never show up on a single face, so if both boxes are checked the module is unlikely to ever react to anything at all.

- Looking for all emotions at the same time – as expressive as a human face can be, there is a limit to what it can show; no face can exhibit all 7 emotions simultaneously, so checking all boxes would also prevent the module from reacting to anything at all.

React only to the specified amount of faces in camera image – indicates how many faces (minimum and maximum) need to be in the frame for the detector to react.

React only to the specified percentage of the selected emotions from the total number of recognized faces – this makes the module more statistics-oriented: it will check all faces in the frame (up to 200) for the selected emotions and calculate the percentage of those that fit the requirements; if that percentage fits the sliders you set up – the detector reacts. In short, it can show how many of the people currently in the frame are happy or sad and so on. Such data is paramount for behavior analysis.
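As a rough illustration of these two frame-level settings, the sketch below counts the faces in a frame and computes the share of them that fit the selected emotions. All names (`face_matches`, `face_count_ok`, `matching_share`) and the sample data are hypothetical, and how Xeoma combines the two settings internally is not described here, so they are kept as separate checks.

```python
def face_matches(scores, rules):
    """True if every selected emotion lies inside its (min, max) range."""
    return all(low <= scores.get(emotion, 0) <= high
               for emotion, (low, high) in rules.items())


def face_count_ok(faces, min_faces, max_faces):
    """True if the number of faces in the frame is within the set limits."""
    return min_faces <= len(faces) <= max_faces


def matching_share(faces, rules):
    """Percentage of faces in the frame that fit the selected emotions."""
    if not faces:
        return 0.0  # assumption: an empty frame counts as 0%
    matching = sum(1 for scores in faces if face_matches(scores, rules))
    return 100.0 * matching / len(faces)


# Four made-up faces; three of them are at least 50% happy.
faces = [{"happy": 80}, {"happy": 65}, {"happy": 10, "sad": 70}, {"happy": 90}]
rules = {"happy": (50, 100)}

share = matching_share(faces, rules)
print(face_count_ok(faces, 2, 10))  # True
print(share)                        # 75.0
print(30 <= share <= 100)           # True: fits a 30-100% slider range
```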

Full path to an external program for processing of detection results – this exists for integration purposes: you can make a script or database that will store and manage the detector’s findings and put the path to the program that opens the script/database into this box (e.g. Python, PHP, etc.).

Parameters for launch of the external program – works in conjunction with the previous box; here is where you put the path to the actual script/database (e.g. my_script.py, emotion_database.php, etc.) and tell Xeoma what parameters to pass on to it using macros. You can check the whole list of these parameters in Information about launch of the external program.

Interval between launches of the external program – sets how often the script/database can be used; since a single face may linger in the frame for some time, you may not need to send the info on it several times, so this interval can be set higher.
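A minimal sketch of such an external script is shown below, assuming the Python interpreter is set as the external program and the script path plus the chosen macros are placed in the parameters box. The script stores whatever arguments it receives in a SQLite database using Python's standard `sqlite3` module. The file names, table name and function name are hypothetical, and the script deliberately does not interpret the arguments, since the actual macros are listed in Information about launch of the external program.

```python
import sqlite3
import sys
from datetime import datetime, timezone


def store_event(db_path, values):
    """Append one detection event to a SQLite table; return the row count."""
    con = sqlite3.connect(db_path)
    try:
        with con:  # commits on success, rolls back on error
            con.execute(
                "CREATE TABLE IF NOT EXISTS events "
                "(received_at TEXT, payload TEXT)"
            )
            con.execute(
                "INSERT INTO events VALUES (?, ?)",
                (datetime.now(timezone.utc).isoformat(), "\t".join(values)),
            )
        return con.execute("SELECT COUNT(*) FROM events").fetchone()[0]
    finally:
        con.close()


if __name__ == "__main__" and len(sys.argv) > 1:
    # sys.argv[1:] holds whatever the parameters box expands to;
    # the database file name is a placeholder.
    store_event("emotion_events.db", sys.argv[1:])
```

Because every launch adds one row, a higher interval between launches directly reduces how many near-identical rows a lingering face produces.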

Save data in CSV report – creates a file in the specified directory that will log the detector’s every reaction for future (or immediate) analysis.
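Once such a report exists, it can be summarized with a few lines of Python. The sketch below counts how often each value occurs in one column. The layout of Xeoma's report is not described here, so the comma delimiter, the header row and the column names `time` and `mood` are pure assumptions for illustration; adjust them to the real file.

```python
import csv
import io
from collections import Counter


def count_values(csv_text, column):
    """Count how often each value appears in the given CSV column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row[column] for row in reader)


# Made-up sample data with hypothetical column names.
sample = "time,mood\n10:00:01,Happy\n10:00:05,Neutral\n10:00:09,Happy\n"

counts = count_values(sample, "mood")
print(counts.most_common())  # [('Happy', 2), ('Neutral', 1)]
```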

The possible applications for emotion recognition are quite diverse: crowd control, road safety, statistics gathering and, of course, retail marketing. In terms of crowd control, a large number of faces with a high percentage for the angry or afraid measures can serve as an indicator of a fight or an uproar. In a car, a system that constantly monitors the driver’s emotional state can serve as a calming factor to prevent road rage and as a preventative measure against falling asleep at the wheel. Statistics and marketing go hand-in-hand: knowing how often a client expressed positive emotions while looking at certain merchandise can show a marketer whether its position is well-chosen (catches the eye) and whether it has potential and relevance for the chosen target audience. This is particularly valuable for new merchandise.

It goes without saying that the combination of high-speed neural networks and behavior analysis is a cutting-edge technology, one that is expected to grow and be refined at an incredible pace. We are excited to follow this trend and bring you the fruits of this work.

DISCLAIMER: No faces or emotions were harmed in the making of this article. All faces are properties of their respective owners.

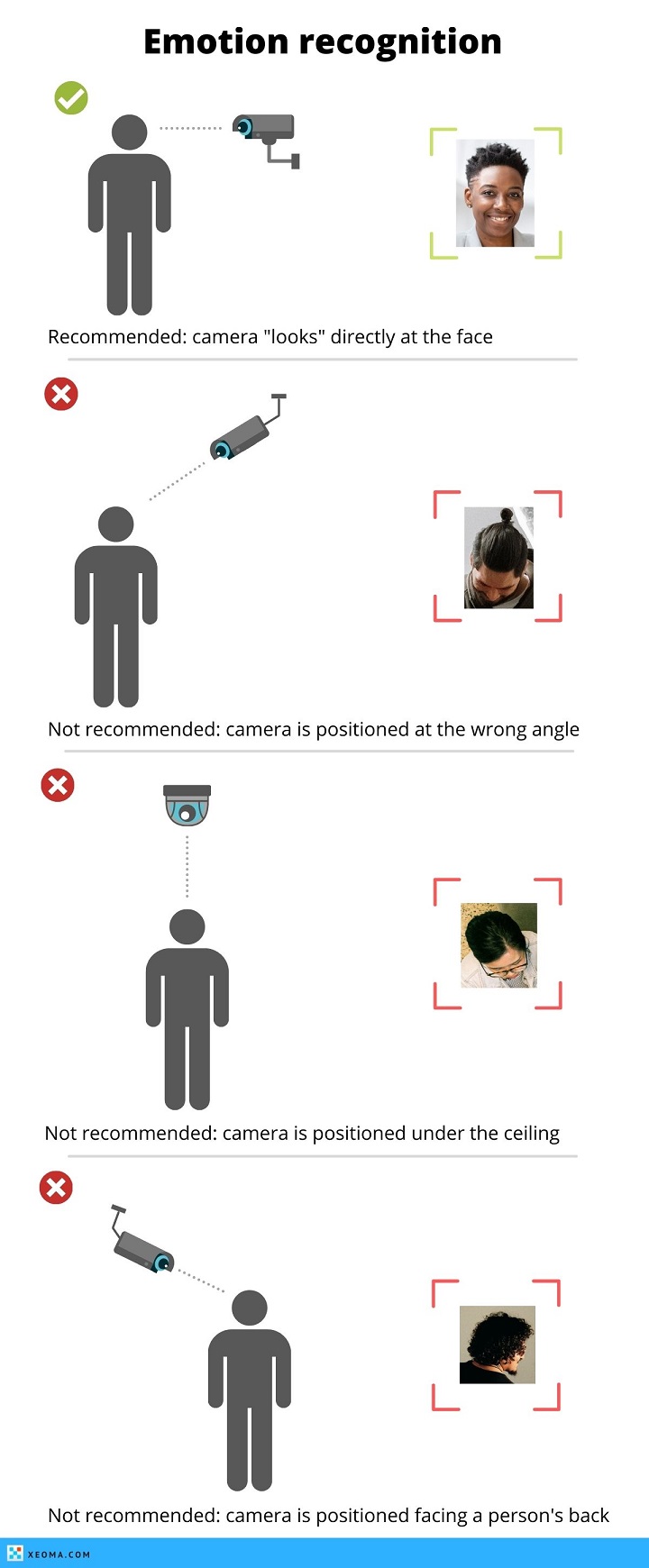

How to install the camera to use Emotion recognition

Here are the ways to increase the successful recognition rate:

• Place the camera as close as possible to the area where emotions need to be detected (preferably at a right angle to the face)

• Keep the camera at a right angle to the face; the face should occupy a large part of the frame

• Lighting should be neither very dim nor full of flashes (you can use special HLC (High Light Compensation) cameras, often marked ‘For LPR/ANPR’)

• Use a long-focus lens for a better view

December 24, 2018

Read also:

Face Recognition

Dynamic Face Blur